Moreover, according to a recent experiment conducted by Anyscale, LLaMA 2 70B is… Yet, just comparing the models' sizes based on parameters, Llama 2's 70B vs. … A bigger model size isn't… Both models are highly capable, but GPT-4 is more advanced, while LLaMA 2 is… Llama-2-70b is almost as strong at factuality as gpt-4 and considerably better… For optimal factual summarization akin to human accuracy, either Llama-2-70b…

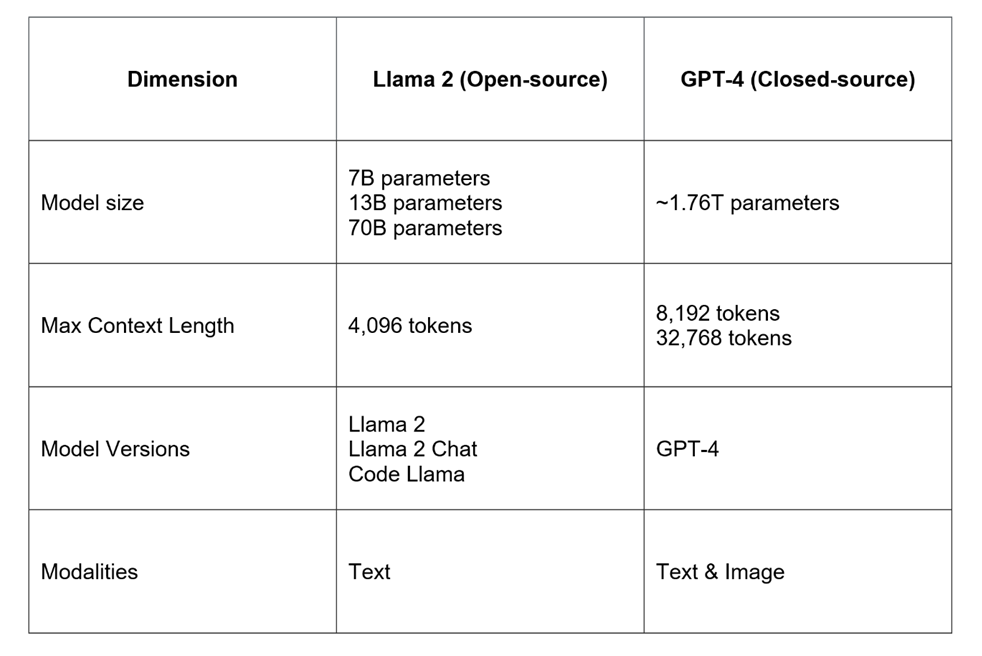

Llama-2, much like other AI models, is built on a classic Transformer architecture. To make the 2 trillion tokens and internal weights easier to handle, Meta… The LLaMA-2 paper describes the architecture in good detail to help data scientists recreate and fine-tune the models, unlike OpenAI papers, where you have to deduce it: "In this work, we develop and release Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters." Llama 2 is a family of pretrained and fine-tuned LLMs released by Meta AI in…
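To see where a figure like "7 billion parameters" comes from, you can count the weights of a Transformer decoder directly from its hyperparameters. The sketch below assumes the configuration values published for Llama-2-7b in its Hugging Face config (hidden size 4096, 32 layers, MLP hidden size 11008, vocabulary 32000, separate input embedding and output head, no bias terms); the `LlamaConfig` dataclass and `count_parameters` function here are hypothetical helpers written for illustration, not Meta's or Hugging Face's code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LlamaConfig:
    # Hypothetical container; values assumed from the published Llama-2-7b config.
    vocab_size: int = 32_000
    hidden_size: int = 4_096
    intermediate_size: int = 11_008
    num_layers: int = 32


def count_parameters(cfg: LlamaConfig) -> int:
    """Count weights of a Llama-style decoder with full multi-head attention."""
    d = cfg.hidden_size
    attention = 4 * d * d                  # q, k, v and output projections, d x d each
    mlp = 3 * d * cfg.intermediate_size    # gate, up and down projections (SwiGLU MLP)
    norms = 2 * d                          # two RMSNorm weight vectors per block
    per_layer = attention + mlp + norms
    embeddings = cfg.vocab_size * d        # token embedding table
    lm_head = cfg.vocab_size * d           # separate (untied) output projection
    final_norm = d                         # RMSNorm before the output head
    return cfg.num_layers * per_layer + embeddings + lm_head + final_norm


if __name__ == "__main__":
    total = count_parameters(LlamaConfig())
    print(f"{total:,} parameters (~{total / 1e9:.2f}B)")
```

Run as a script, this prints `6,738,415,616 parameters (~6.74B)`. The same formula does not carry over unchanged to the 70B model, which uses grouped-query attention and therefore has smaller key and value projections.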

If, on the Llama 2 version release date, the monthly active users of the products or services made available by or for Licensee or Licensee's affiliates is… Unlock the full potential of Llama 2 with our developer documentation: the Getting Started guide provides instructions and resources to start building with Llama 2. Llama 2 is also available under a permissive commercial license, whereas Llama 1 was limited to non-commercial use. Llama 2 can process longer prompts than Llama 1 and is… Llama 2: the next generation of our open-source large language model, available for free for research and commercial use. Meta and Microsoft announced an expanded artificial intelligence partnership with the release of their new large language model…

Models for Llama CPU-based inference:

| CPU | Memory channels | Supported memory | Bandwidth |
| --- | --- | --- | --- |
| Core i9-13900K | 2 | DDR5-6000 | 96 GB/s |
| Ryzen 9 7950X | 2 | DDR5-6000 | 96 GB/s |

This is an example of…

Explore all versions of the model, their file formats (GGML, GPTQ, and HF), and the hardware requirements for local inference. Meta has rolled out its Llama-2 family of…

Some differences between the two models: Llama 1 was released in 7, 13, 33, and 65 billion parameter sizes, while Llama 2 comes in 7, 13, and 70 billion parameter sizes. Llama 2 was also trained on 40% more data.

In this article, we show how to run Llama 2 inference on Intel Arc A-series GPUs via Intel Extension for PyTorch. We demonstrate with Llama 2 7B and Llama 2-Chat 7B inference on Windows and…

MaaS enables you to host Llama 2 models for inference applications using a variety of APIs, and also provides hosting for you to fine-tune Llama 2 models for specific use cases.
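The 96 GB/s figure quoted for dual-channel DDR5-6000 matters because token generation on a CPU tends to be limited by how fast the weights can be streamed from RAM. The sketch below is a rough rule of thumb, not a benchmark: it assumes each memory channel is 64 bits (8 bytes) wide, that every generated token reads all model weights once, and it ignores KV-cache traffic, caches, and runtime overhead, so the tokens-per-second result is only an upper bound. The function names are hypothetical, and "4-bit" stands in for quantized files whose real size is somewhat larger because of per-block scaling data.

```python
# Rough, memory-bandwidth-bound estimate for CPU decoding of Llama 2.
# Assumptions (not from the source): 8-byte-wide channels, all weights read once
# per generated token, KV cache and overheads ignored, so results are upper bounds.


def peak_bandwidth_gbs(mega_transfers: int, channels: int, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s: MT/s x bytes per transfer x channels / 1000."""
    return mega_transfers * bytes_per_transfer * channels / 1000


def weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate weight size in GB (10^9 bytes) at a given precision."""
    return params_billion * bits_per_weight / 8


def max_tokens_per_s(bandwidth_gbs: float, model_gb: float) -> float:
    """Upper bound on decode speed if each token streams every weight once."""
    return bandwidth_gbs / model_gb


if __name__ == "__main__":
    bw = peak_bandwidth_gbs(6000, channels=2)  # DDR5-6000, dual channel
    print(f"peak bandwidth: {bw:.0f} GB/s")
    for size in (7, 13, 70):
        for bits in (16, 4):
            gb = weights_gb(size, bits)
            bound = max_tokens_per_s(bw, gb)
            print(f"Llama 2 {size}B @ {bits}-bit: ~{gb:.1f} GB, <= {bound:.1f} tokens/s")
```

For example, a 70B model at 4 bits per weight is about 35 GB, so at 96 GB/s the bound works out to roughly 2.7 tokens per second; real throughput is usually lower.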
